In case you missed it, you can check out the first part of Unity vs Unreal: Choosing an engine for Space Dust Racing at Part 1: Adventures in Unity.

Unreal GDC

Three of us made it over to GDC this year and after having spent the previous few days participating in the speed dating of game development (also known as Game Connection America), we were leisurely wandering around the GDC expo halls.

We had a chat to the guys at the Unity booth, showed them one of our playable prototypes, then went and said hello to a friend in the Qualcomm booth. Next we stared for a while at a demonstration Allegorithmic had on their Substance workflow, followed by a quick laugh at Goat Simulator. Soon after, we spotted an odd-looking sign on the back of some unknown booth which basically said:

Get Unreal. Full engine and source. $19/MO +5%

After a few exchanges of WTH, we walked around to the front. Sure enough, it was Epic Games’ Unreal Engine 4 booth, with a ton of people gathered around and as many staff members eager to answer the onslaught of questions. After a few more exchanges of WTH with the staff, we joined the queue and had a look at the presentation they had going on in the back. Yep, looked legit. Fast forward a couple of hours to the hotel room, where we had signed up and downloaded a copy to start checking out.

A collection of snapshots taken while in San Francisco attending GameConnection and GDC 2014.

Rendering

For us, when compared to Unity, probably one of the biggest assets Unreal brings to the table is its rendering technology (or networking or source – it’s close). It features modern physically-based shading, full-scene reflections, TXAA, integrated GPU particles, an efficient and easy to use terrain system (including foliage and spline tools), as well as a host of other desired features. Most features are easy to use, efficient and importantly – well integrated.

A good example would be the environment reflection captures, which ‘just’ involve placing primitives (sphere or box) into the scene. They are then captured, and at runtime they are selected, (approximately) re-projected and blended together in a similar screen-coverage-determines-cost way to the rest of the deferred rendering pipeline. In addition, they are blended with dynamic screen space reflections and use the Lightmass data to reduce leaking. All in all, a pleasurable system to work with.

Another example might be the particle system. The GPU particles are embedded within the standard particle system and support most of the standard particle features, but also feature screen space collisions, vector fields and plentiful numbers of particles. They have also made a good effort to do complexity-decoupled particle lighting.

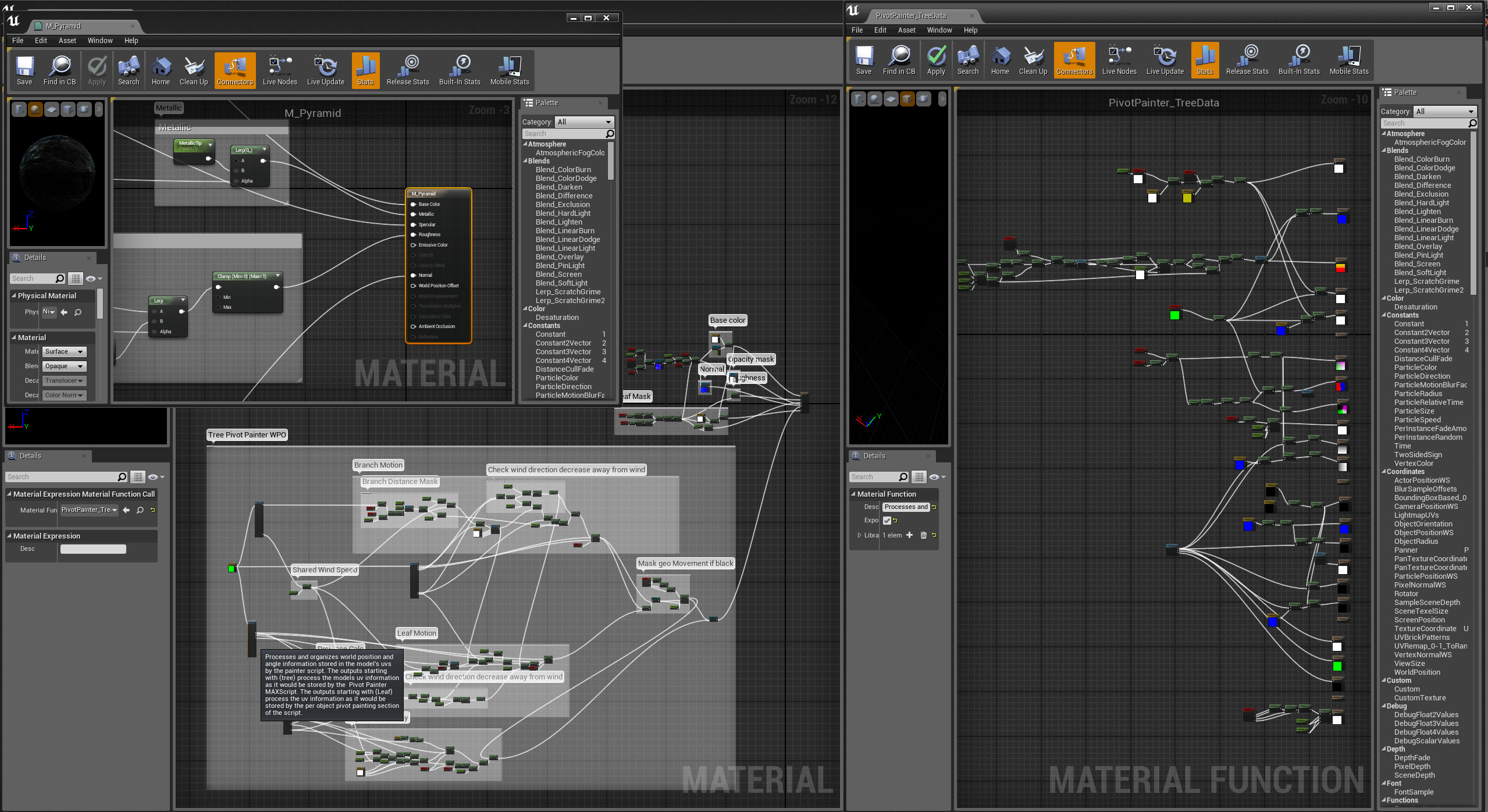

Materials

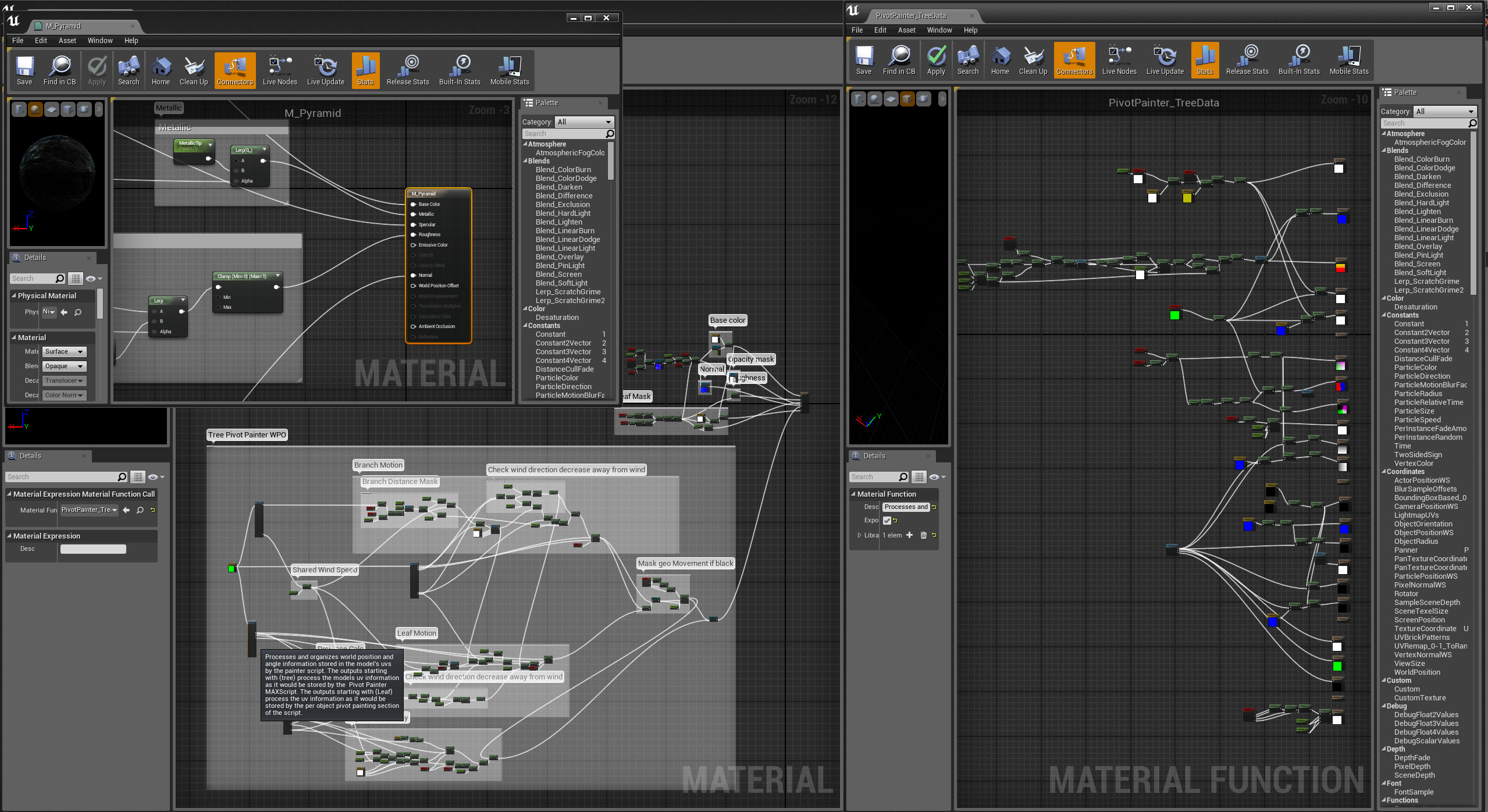

Underlying most of the graphical content is the material system. It is a visual scripting/node graph based interface that covers most of your needs from static and skeletal mesh materials to particles and post processing effects. In order to keep things clean, you can create material functions to group node logic and then expose it in a simpler form. You can also write custom material expression code snippets if you need to use advanced shader features or just want to simplify your node graph.

The material and material function editors.

The completed material graphs are first compiled down to HLSL or GLSL, and then get included by template shaders which basically pick and choose which part of the material code should be included for each type of shader required. It is then fed to the device to be compiled further, resulting in fairly efficient shaders. They have included an option within the material editor to view the generated HLSL code.

If you need to do something more advanced, such as hand optimize, use compute shaders or supply constant arrays, it gets a little less ideal as you’ll need to jump in and start modifying the engine code – however that option is there (first world problems, eh?).

Renderer WIP

Other parts of the renderer can be a bit lacking, missing or just generally incomplete. For example, each translucent object uses a single reflection capture, chosen by a simple distance-to-center heuristic that does not take the influence radius into account, so it can easily end up selecting a non-ideal cubemap.
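To make the reflection capture issue concrete, here is a minimal, engine-independent C++ sketch. None of it is UE4 code: `Vec3`, `Capture` and both functions are hypothetical names made up for illustration. The first function mirrors the nearest-center heuristic described above; the second scores each capture by distance divided by influence radius, which is just one possible alternative and not something Epic is known to use.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for illustration only (not engine types).
struct Vec3 { float x, y, z; };
struct Capture {
    Vec3 center;   // where the capture primitive was placed
    float radius;  // its influence radius
};

static float distanceBetween(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Nearest center wins; the influence radius is ignored entirely.
std::size_t pickByCenterDistance(const std::vector<Capture>& captures, const Vec3& p) {
    assert(!captures.empty());
    std::size_t best = 0;
    float bestDist = distanceBetween(captures[0].center, p);
    for (std::size_t i = 1; i < captures.size(); ++i) {
        const float d = distanceBetween(captures[i].center, p);
        if (d < bestDist) { bestDist = d; best = i; }
    }
    return best;
}

// Assumed alternative: normalise distance by radius, so a score below 1
// means the point is inside the capture's influence sphere.
std::size_t pickByNormalizedDistance(const std::vector<Capture>& captures, const Vec3& p) {
    assert(!captures.empty());
    std::size_t best = 0;
    float bestScore = INFINITY;
    for (std::size_t i = 0; i < captures.size(); ++i) {
        assert(captures[i].radius > 0.0f);
        const float score = distanceBetween(captures[i].center, p) / captures[i].radius;
        if (score < bestScore) { bestScore = score; best = i; }
    }
    return best;
}
```

With a large capture of radius 10 at the origin and a small capture of radius 1 at x = 6, an object at x = 4 is closer to the small capture's center even though it sits well outside its influence. The first function picks the small capture, the second picks the large one.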

In another case we had set out to test the foliage instancing with a few boulder meshes. We followed the lightmap requirements but couldn’t get the lighting to match – only to find that the UInstancedStaticMeshComponent::GetStaticLightingInfo function was empty.

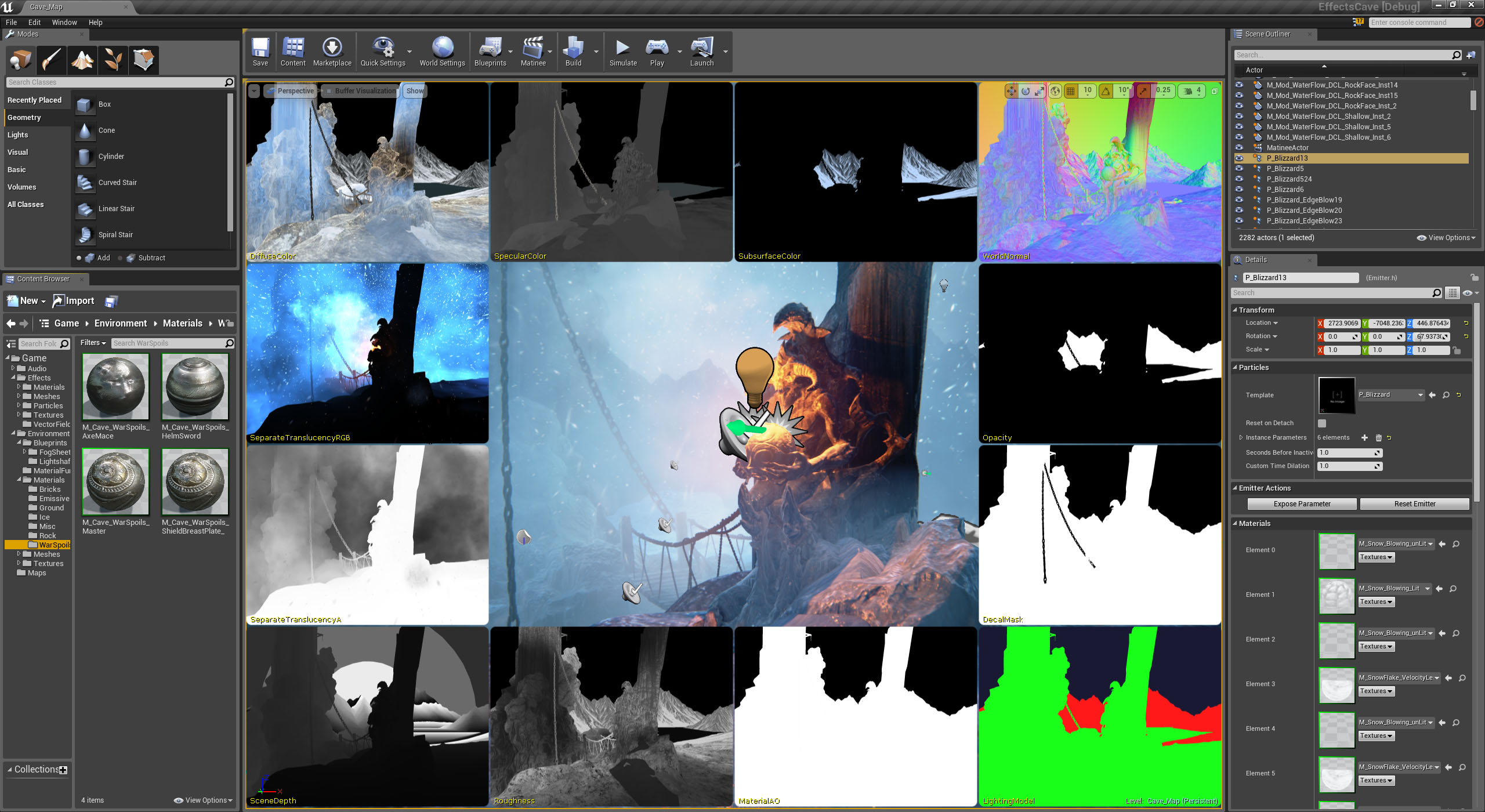

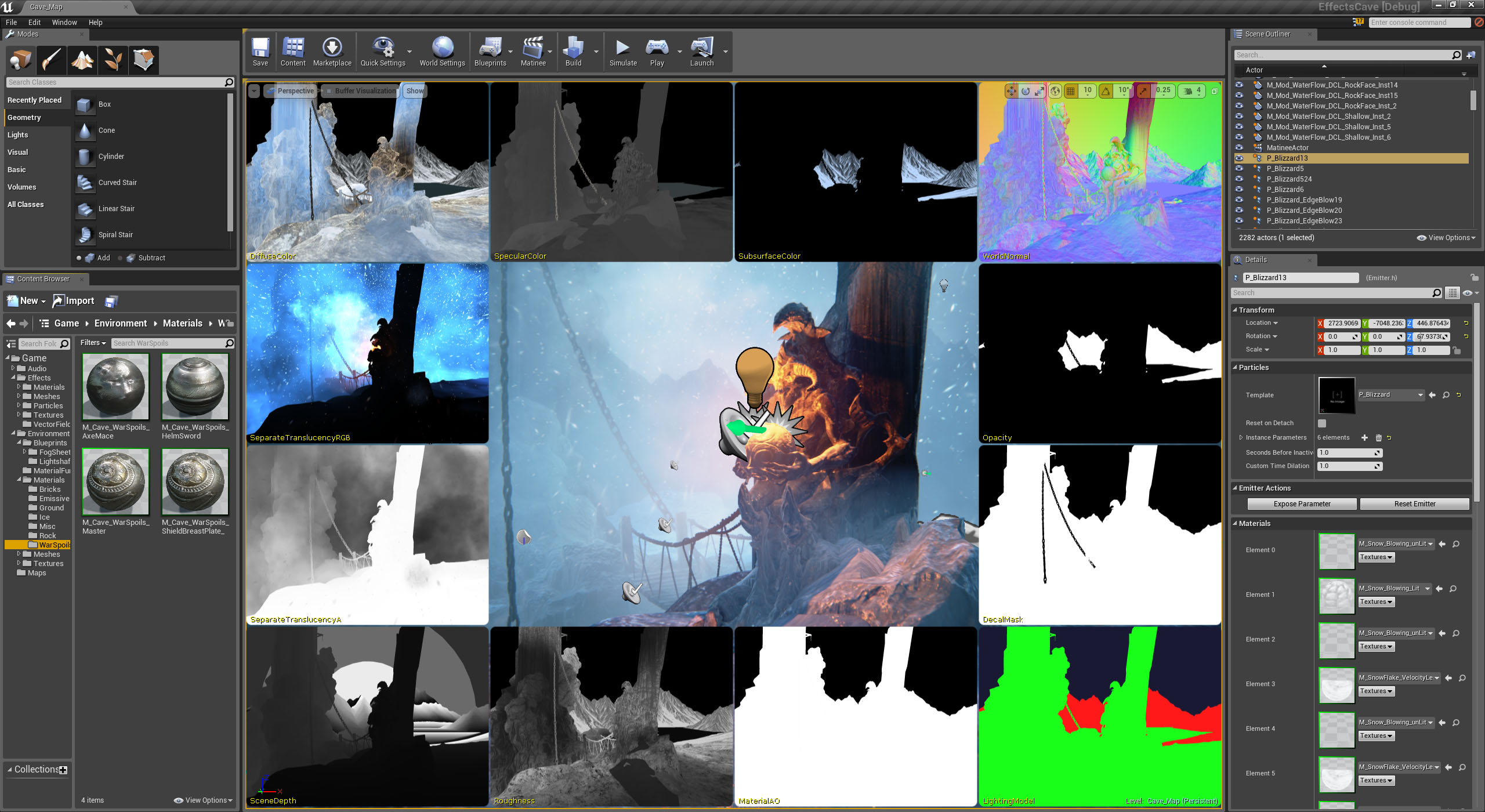

Unreal Engine 4 visualizing a bunch of the rendering buffers.

Networking

Another huge plus for Unreal over Unity, to us, is its networking and replication solution. Its overall architecture has been proven over many iterations of the engine, and with Epic Games’ Fortnite as well as many other upcoming games utilizing the current iteration, it should be just as solid.

Right out of the box they support dedicated authoritative servers, which most commercial online games require. Even better, they can be compiled to run under Linux. If you need to host a bunch of game instances on a server farm back-end you will see that Windows boxes are almost always much more expensive than Linux boxes using the same hardware. Microsoft’s Azure instances are about 50% more expensive using Windows than using Linux, whereas Amazon EC2 instances using Windows are almost double the price of Linux instances.

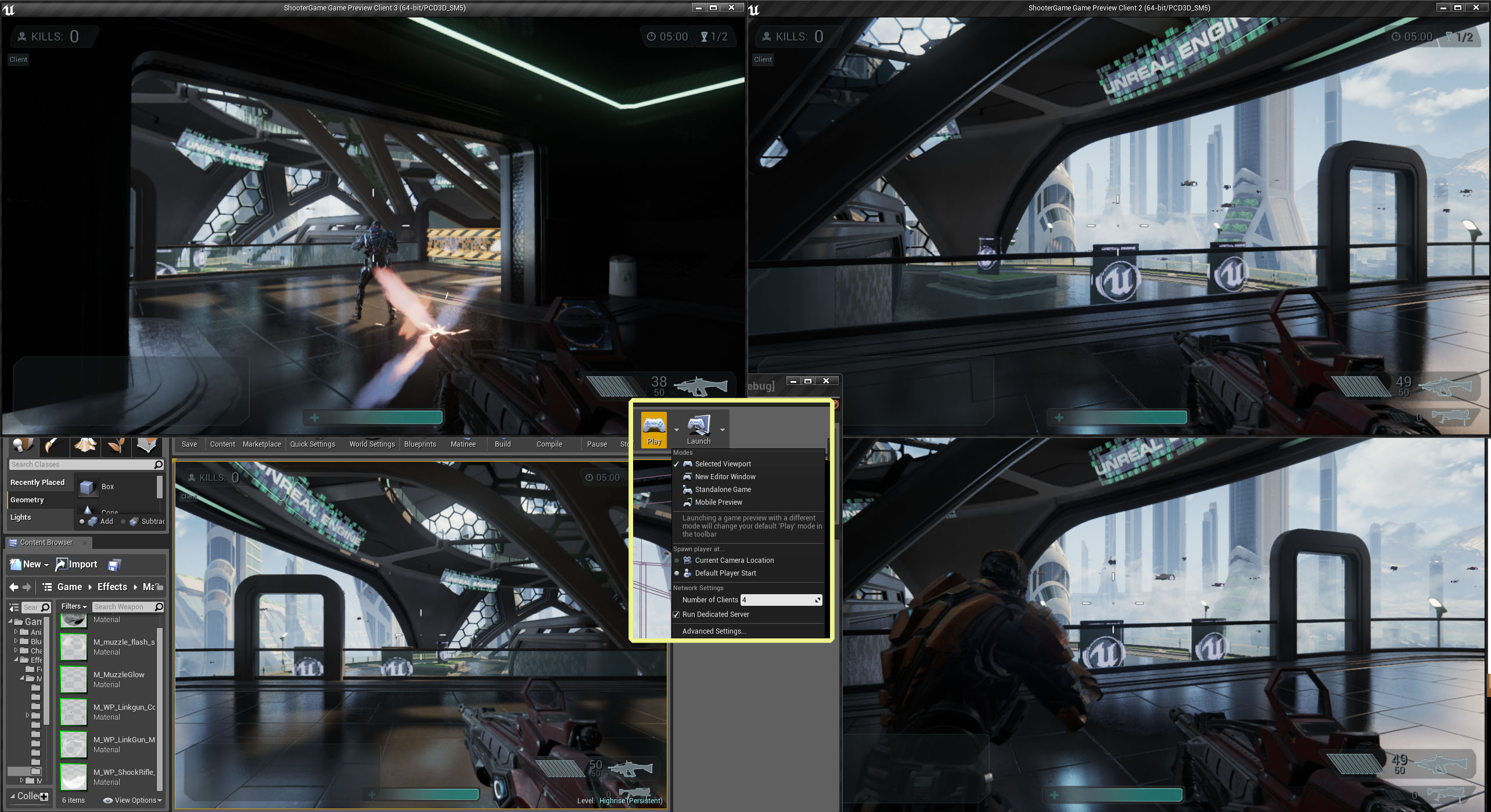

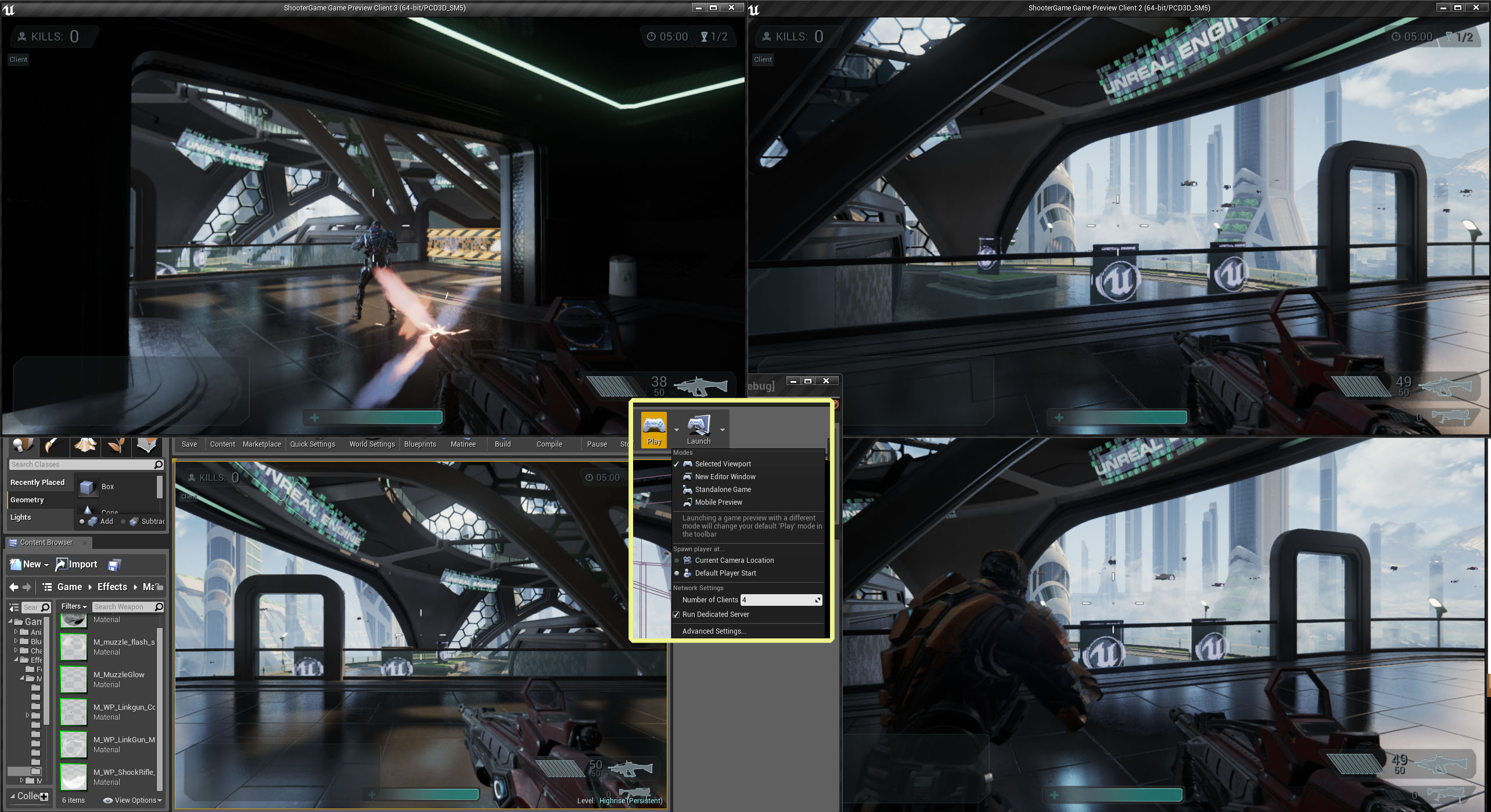

Another nicety is that they have made it fairly easy to run dedicated servers and test multiple users on the one machine, all from within the editor.

Who needs friends? Four way multi-player on the one desktop.

Networking under Unity

Under Unity, from what we could tell, most people ignore the built-in solution (for a bunch of reasons) and instead go for a third party option such as Photon or uLink, but there is a whole spreadsheet of alternatives that the community has put together for comparison. If you want a C++ server, you will be looking at writing both your own plugin for Unity and the back-end server yourself.

The solution we were leaning towards was not really ideal. It did support dedicated authoritative servers where we could run our fundamental physics and gameplay simulation on the server, but as it was written in C# we would have needed to use Mono under Linux, and we had been warned that we would see noticeably reduced performance compared to Windows. At the time, gauging by the forums, this approach was also mostly untested on Linux. For these reasons, as well as the upfront/ongoing costs involved and a bit of misalignment with what we really needed, we budgeted for writing our own solution.

At that stage we also re-evaluated UDK/UE3 because of its networking framework, however due to cost and engine limitations we decided against it. Now that UE4 is out, it solves a lot of those issues for us.

Networking resources

If you want to learn more about the basics of Unreal’s networking and replication, they have a great, long-standing UE3 documentation page, as well as a smaller UE4 page and a Networking Tutorials playlist that uses their Blueprints system. (Watching those videos, I was a little concerned that the server side of the blueprint might ship with the client, which is far from ideal. I am not sure that is the case, however.)

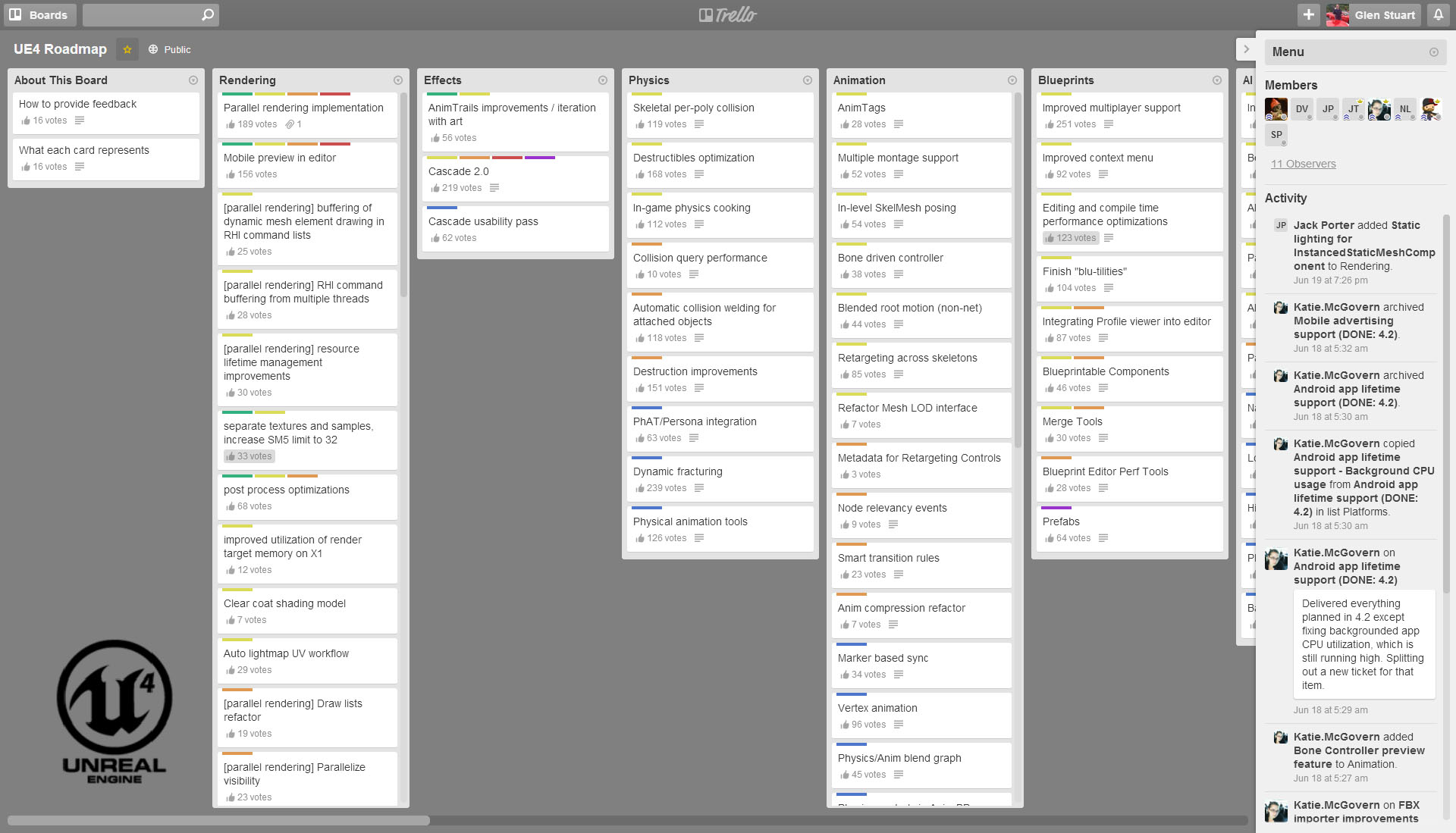

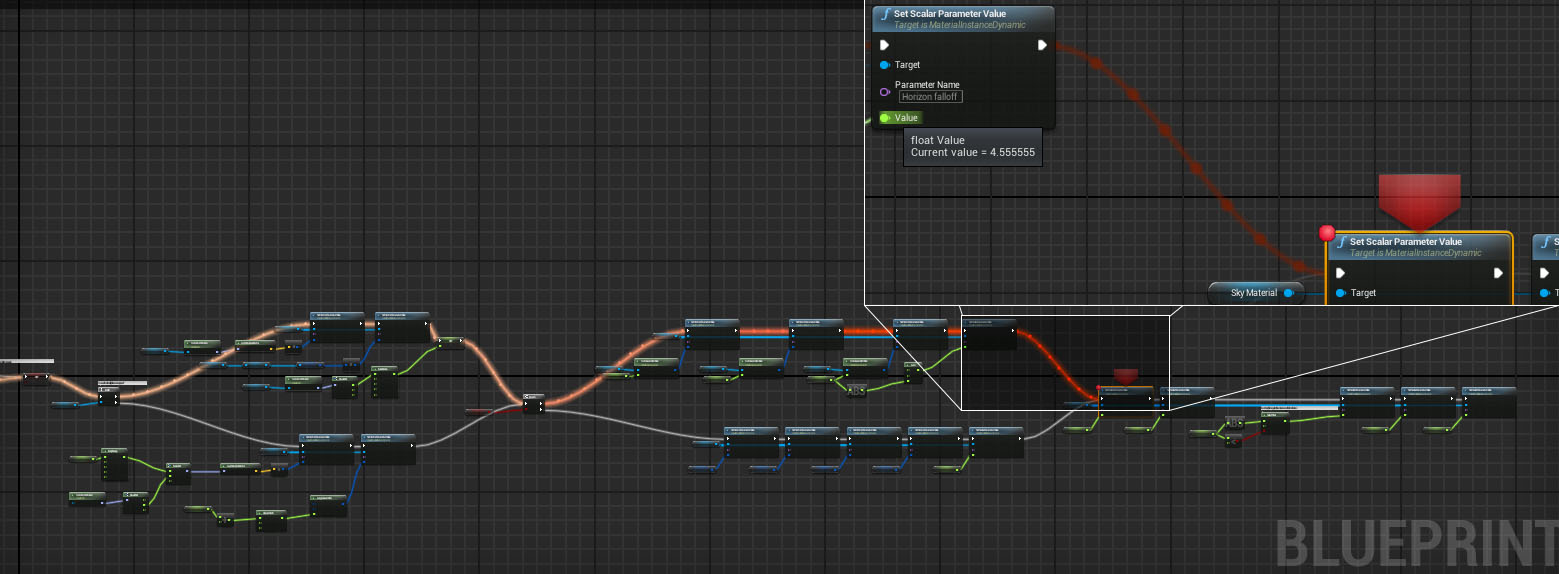

Blueprints

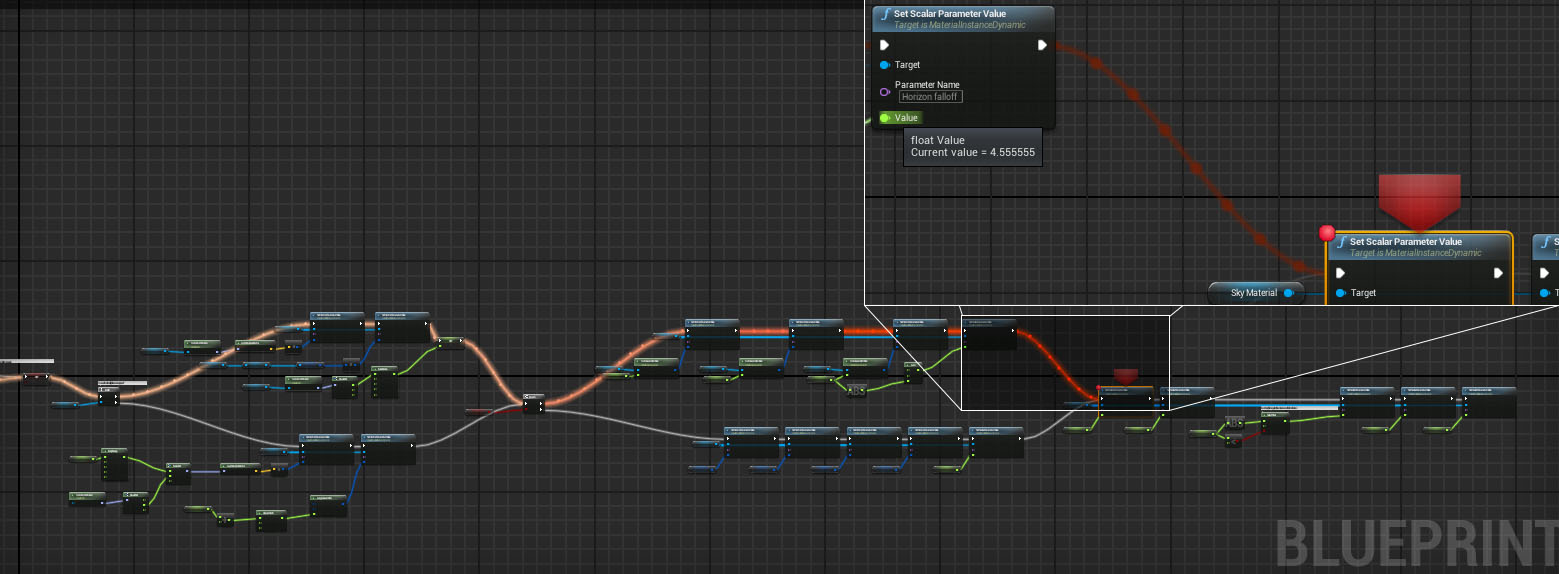

Blueprints would have to be one of the better, well integrated visual scripting languages I have used. It is fairly clean and easy to use, but most of all that is down to the runtime visual debugger; debugging a visual language without one can be painful. It is also great to see that they are continuing to add features to make the workflow that much better. For anyone struggling to learn C# under Unity, Blueprints could potentially offer the answer.

I have heard that Blueprints cost roughly the same as UnrealScript, at about 10x slower than the equivalent C++, but used correctly that might be mostly mitigated. However, it does make me wonder why they aren’t compiled down to C++ and then run through an optimizing compiler, in a similar way to the material networks.
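As a rough back-of-envelope illustration of why that overhead might be mostly mitigated, the sketch below estimates the relative cost of game logic when only part of it runs in a slower scripting layer. The function name and the simple linear model are my own assumptions, and the 10x figure is the hearsay number from above, not a measurement.

```cpp
#include <cassert>

// Relative cost of game logic when `scriptedFraction` of the work
// (measured as native C++ time) instead runs in a scripting layer that
// is `slowdown` times slower. Returns 1.0 when everything stays native.
// Hypothetical helper using an assumed linear model.
double relativeLogicCost(double scriptedFraction, double slowdown) {
    assert(scriptedFraction >= 0.0 && scriptedFraction <= 1.0);
    assert(slowdown >= 1.0);
    return (1.0 - scriptedFraction) + scriptedFraction * slowdown;
}
```

Under this model, with a 10x slowdown, keeping 1% of the logic in script costs about 1.09x overall, whereas moving half of it there costs 5.5x. That is the argument for keeping heavy per-frame work in C++ and using Blueprints for glue and events.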

If only the links were depicted as strands of spaghetti, we could redefine spaghetti code.

Sample projects

Worth a mention is the number of high quality, varied, meticulously maintained example projects that have been coming out of the Marketplace. While learning the engine, I would often load up and refer to the Shooter Game, Strategy Game or Content Examples, but I also spent a good amount of time looking over their Elemental Demo and Effects Cave, as well as most of the other projects. Although we aren’t using the vehicle physics released in UE4.2, the Vehicle Game is another top example.

They are all definitely worth a look and you can find supporting documentation on their site.

The rest?

Most of the other core built-in systems for Unreal, such as NavMesh, audio, streaming and the animation tools, look solid (if a little FPS/TPS orientated). It also includes CSG tools, which are handy for prototyping/blocking out levels.

The Windows XInput implementation is currently missing vibration support, otherwise it works well. They have included a nice plugin framework for adding additional input modules.

The experimental behaviour trees look good. The cinematics tool, Matinee, is cool but looks set to be superseded eventually by a newer system called Sequencer. They are also in the process of improving their UI systems by adding a WYSIWYG system called UMG (Unreal Motion Graphics). It will be built on top of Slate, which is used most often for game UI but is also what the Editor itself uses.

I had better leave it here for this post but will cover the rest of the rest next time, including some of the key considerations.